Research Paper: CoinRun: Overcoming goal misgeneralisation

Stuart Armstrong, Alexandre Maranhão, Oliver Daniels-Koch, Patrick Leask, Rebecca Gorman

Goal misgeneralisation is a key challenge in AI alignment (the task of getting powerful artificial intelligences to align their goals with human intentions and human morality). In this paper, we show how the ACE (Algorithm for Concept Extrapolation) agent can solve one of the standard goal-misgeneralisation challenges, the CoinRun challenge, without using any new reward information in the new environment. This points to how autonomous agents could come to be trusted to act in human interests, even in novel and critical situations.

What is goal misgeneralisation?

Goal misgeneralisation is a problem in artificial intelligence (AI) where an AI agent learns a goal that matches the intended one in its training environment, but fails to carry the intended goal over to different environments. This happens because the agent has only been exposed to a limited set of scenarios, in which several different goals can fit its experience equally well, and it lacks the ability to tell which of them should generalise to new scenarios.

As a result, the agent may pursue the wrong goal, or otherwise fail to behave as intended, when it encounters a different environment. Solving goal misgeneralisation is vital for building safe and powerful AI systems that act in human interests and that actually work “in the wild”. Modern AIs degrade when confronted with out-of-distribution scenarios (inputs too far from those they were trained on), which is problematic because the real world is unpredictable and complex.

Source: OpenAI

Our CoinRun results

Today we revealed a groundbreaking advance in tackling misgeneralisation: teaching an AI to “think” in human-like concepts. This is the first time an AI lab has solved the CoinRun goal-misgeneralisation challenge, opening the door to more precise, reliable and scalable AI.

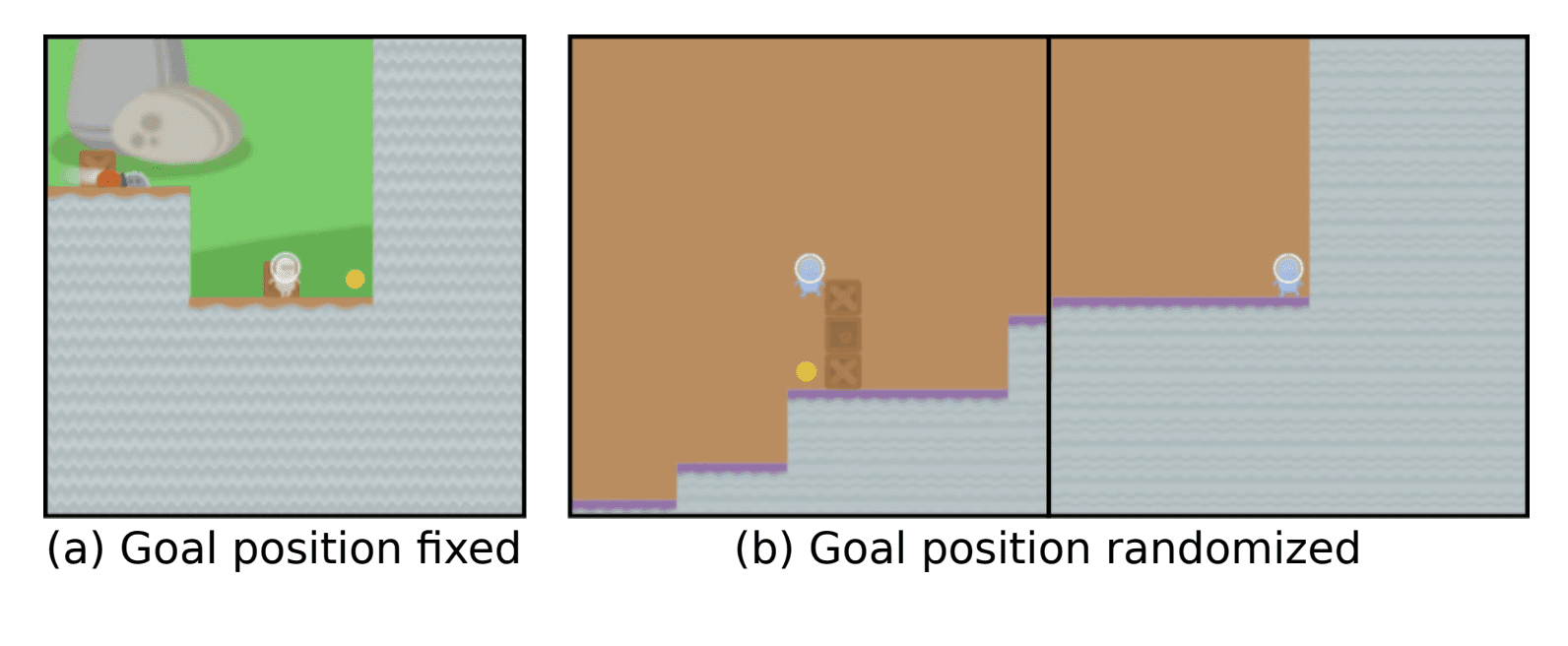

To achieve this milestone, we used the CoinRun misgeneralisation benchmark, an Atari-style game released by researchers at Google DeepMind, the University of Cambridge, the University of Tübingen, and the University of Edinburgh. The benchmark tests whether an AI can deduce a complex goal when that goal is spuriously correlated with a simpler goal in its training environment. The AI is rewarded for getting a coin, which is always placed at the end of the level during training but at a random location during testing, and no additional reward information is provided during testing.
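
The benchmark's central difficulty is that, during training, “reach the end of the level” and “get the coin” reward exactly the same behaviour. The toy sketch below is not the actual CoinRun environment and not ACE: it assumes a hypothetical grid level with the coin in one cell, and every class and function name in it is invented for illustration. It checks, level by level, whether the two candidate goals reward exactly the same end cells.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    """Hypothetical toy stand-in for a CoinRun level: a width x height grid of cells."""
    width: int
    height: int
    coin: tuple  # (x, y) cell holding the coin; y = 0 is the ground row


def make_training_level(width=10, height=4):
    # Training distribution: the coin always sits at the far right, on the ground.
    return Level(width, height, (width - 1, 0))


def make_test_level(rng, width=10, height=4):
    # Test distribution: the coin can appear in any cell of the level.
    return Level(width, height, (rng.randrange(width), rng.randrange(height)))


def reward_reach_end(level, cell):
    # Candidate goal A (the simpler, spurious one): finish at the right-hand end.
    return 1.0 if cell == (level.width - 1, 0) else 0.0


def reward_get_coin(level, cell):
    # Candidate goal B (the intended one): finish on the coin.
    return 1.0 if cell == level.coin else 0.0


def agreement(levels):
    """Fraction of levels on which goals A and B reward exactly the same cells."""
    same = 0
    for lv in levels:
        cells = [(x, y) for x in range(lv.width) for y in range(lv.height)]
        if all(reward_reach_end(lv, c) == reward_get_coin(lv, c) for c in cells):
            same += 1
    return same / len(levels)


rng = random.Random(0)
train_levels = [make_training_level() for _ in range(200)]
test_levels = [make_test_level(rng) for _ in range(200)]
print("agreement on training levels:", agreement(train_levels))
print("agreement on test levels:", agreement(test_levels))
```

On the training levels the two goals agree on every level, so the reward signal alone cannot say which one was intended. On the randomised test levels they agree only when the coin happens to land at the end, which in this toy setup is about one level in forty.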

Prior to Aligned AI’s innovation, AIs trained on CoinRun learned that the best way to play the game was to go right while avoiding monsters and holes. Because the coin was always at the end of the level during training, this strategy seemed effective. When such an AI encountered a new level where the coin was placed elsewhere, it would ignore the coin and either miss it or get it only by accident. ACE (which stands for “Algorithm for Concept Extrapolation”), the new AI developed by Aligned AI, notices the changes in the test environment and works out that it should go for the coin, even without new reward information, just as a human would.

Source: arXiv
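
The failure of the “go right” strategy described above can be reproduced in miniature. The sketch below is a hypothetical toy, not CoinRun and not ACE: levels are a 10 by 4 grid, a policy is reduced to the path of cells it visits, and it succeeds if that path passes through the coin. All names and sizes are assumptions made for illustration. It compares a policy that ignores the coin and walks right with one that goes for the coin.

```python
import random

WIDTH, HEIGHT = 10, 4  # hypothetical toy level size; y = 0 is the ground row


def go_right_path(coin):
    """Misgeneralised policy: ignore the coin and walk along the ground to the right edge."""
    return [(x, 0) for x in range(WIDTH)]


def go_for_coin_path(coin):
    """Intended policy: walk along the ground to the coin's column, then climb up to it."""
    cx, cy = coin
    return [(x, 0) for x in range(cx + 1)] + [(cx, y) for y in range(1, cy + 1)]


def success_rate(policy, coins):
    """Fraction of levels where the policy's path passes through the coin's cell."""
    return sum(coin in policy(coin) for coin in coins) / len(coins)


rng = random.Random(0)
train_coins = [(WIDTH - 1, 0)] * 500  # training: coin always at the end, on the ground
test_coins = [(rng.randrange(WIDTH), rng.randrange(HEIGHT)) for _ in range(500)]

for name, policy in [("go right", go_right_path), ("go for coin", go_for_coin_path)]:
    print(f"{name}: train={success_rate(policy, train_coins):.2f}, "
          f"test={success_rate(policy, test_coins):.2f}")
```

Both policies succeed on every training level, so training reward cannot separate them. On the randomised test levels the “go right” policy succeeds only when the coin happens to lie on its path along the ground (about a quarter of levels in this toy setup), which mirrors getting the coin only by accident.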

Why this is important

CoinRun is a simplified environment; however, we believe that our technology can also help to resolve goal misgeneralisation in the more complicated real world.

Progress in AI’s ability to generalise will be key if futuristic AI applications are to become a reality. Today’s self-driving cars have hit pedestrians who were not on a crosswalk because they could not generalise beyond their training data. With better generalisation, robots that struggle today in rooms with complex shadows or unexpectedly patterned floors should be able to operate more robustly. Solving goal misgeneralisation will also be key if we are to save humanity from the existential threat posed by AI: an AI agent that retains its capabilities out of distribution, but pursues an incorrect goal, can use those capabilities to reach arbitrarily bad states.

Our method is a breakthrough for AI because it indicates that significant progress is possible on one of the most fundamental and pervasive problems in AI safety and reliability, which would enable AI systems to behave more predictably, efficiently, and beneficially across a wide range of situations and tasks.